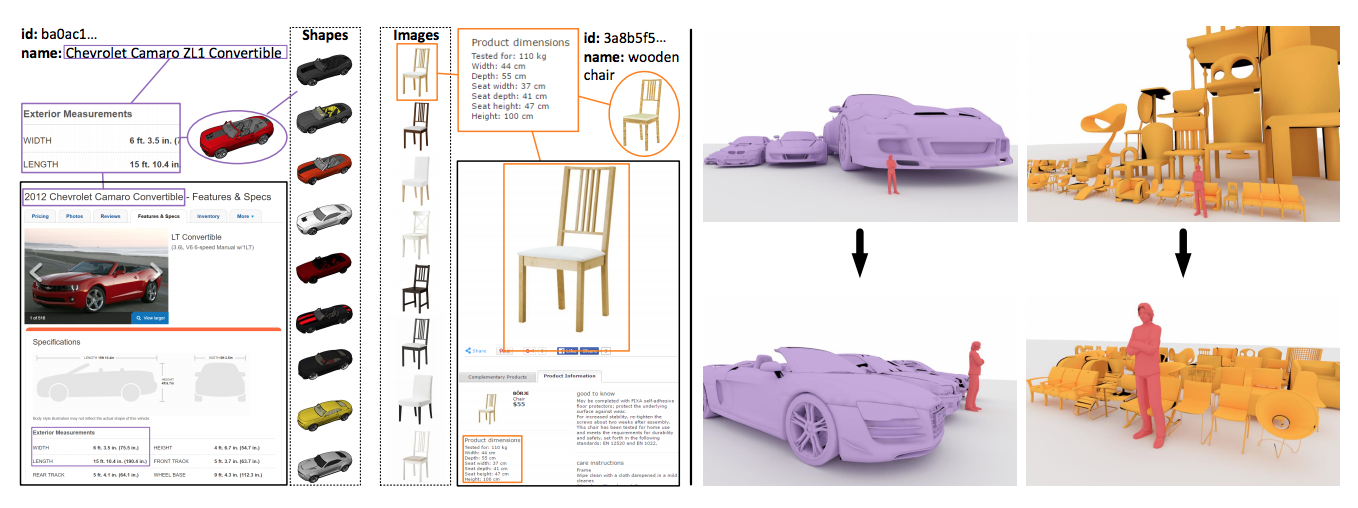

Figure 1: Left: we connect 3D models to webpages with physical attributes. Our approach transfers real-world dimensions by cross-modal linking through text or images (purple and orange links, respectively). Right: we transfer real-world dimensions to a 3D model dataset and rectify physically implausible model scales.

We present an algorithm for transferring physical attributes between webpages and 3D shapes. We crawl product catalogues and other webpages with structured metadata containing physical attributes such as dimensions and weights. We then transfer physical attributes between shapes and real-world objects using a joint embedding of images and 3D shapes, together with a view-based weighting and aspect ratio filtering scheme for instance-level linking of 3D models and their real-world counterparts. We evaluate our approach on a large-scale dataset of unscaled 3D models and show that it outperforms prior work on rescaling 3D models, which considers only category-level size priors.
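To make the aspect ratio filtering and rescaling idea concrete, here is a minimal sketch. It is not the paper's implementation: the function names, the tolerance value, and the choice to compare sorted, normalized dimensions (which ignores axis alignment) are all assumptions made for illustration. It keeps only candidate product dimensions whose proportions roughly match the model's bounding box, then derives a uniform scale factor from a retained match.

```python
from typing import List, Sequence, Tuple

Dims = Tuple[float, float, float]


def normalized_aspect(dims: Sequence[float]) -> Tuple[float, ...]:
    """Sort dimensions in descending order and divide by the largest one.

    Assumption: sorting makes the comparison independent of axis order.
    """
    ordered = sorted(dims, reverse=True)
    return tuple(d / ordered[0] for d in ordered)


def aspect_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest absolute difference between two normalized aspect vectors."""
    na, nb = normalized_aspect(a), normalized_aspect(b)
    return max(abs(x - y) for x, y in zip(na, nb))


def filter_candidates(
    model_extents: Dims, candidates: List[Dims], tol: float = 0.15
) -> List[Dims]:
    """Keep candidates whose proportions match the model within `tol`.

    `tol` is a hypothetical threshold, not a value from the paper.
    """
    return [c for c in candidates if aspect_distance(model_extents, c) <= tol]


def scale_factor(model_extents: Dims, real_dims: Dims) -> float:
    """Uniform scale mapping the model's longest extent to the real one."""
    return max(real_dims) / max(model_extents)


# Unitless bounding box of an unscaled model, and crawled dimensions in meters.
model_extents = (2.0, 1.0, 1.0)
candidates = [(0.5, 1.0, 0.5), (1.0, 1.0, 1.0)]

matches = filter_candidates(model_extents, candidates)
factor = scale_factor(model_extents, matches[0])
```

In this toy example the cube-shaped candidate is rejected because its proportions differ from the elongated model, and the remaining candidate yields the scale factor. A fuller pipeline would first rank candidates with the joint image and shape embedding described above; that step is omitted here.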

Paper | Data

@inproceedings{lin2017crossmodal,
  title={Cross-modal Attribute Transfer for Rescaling {3D} Models},
  author={Shao, Lin and Chang, Angel X. and Su, Hao and Savva, Manolis and Guibas, Leonidas},
  booktitle={Proceedings of the International Conference on {3D} Vision ({3DV})},
  year={2017}
}